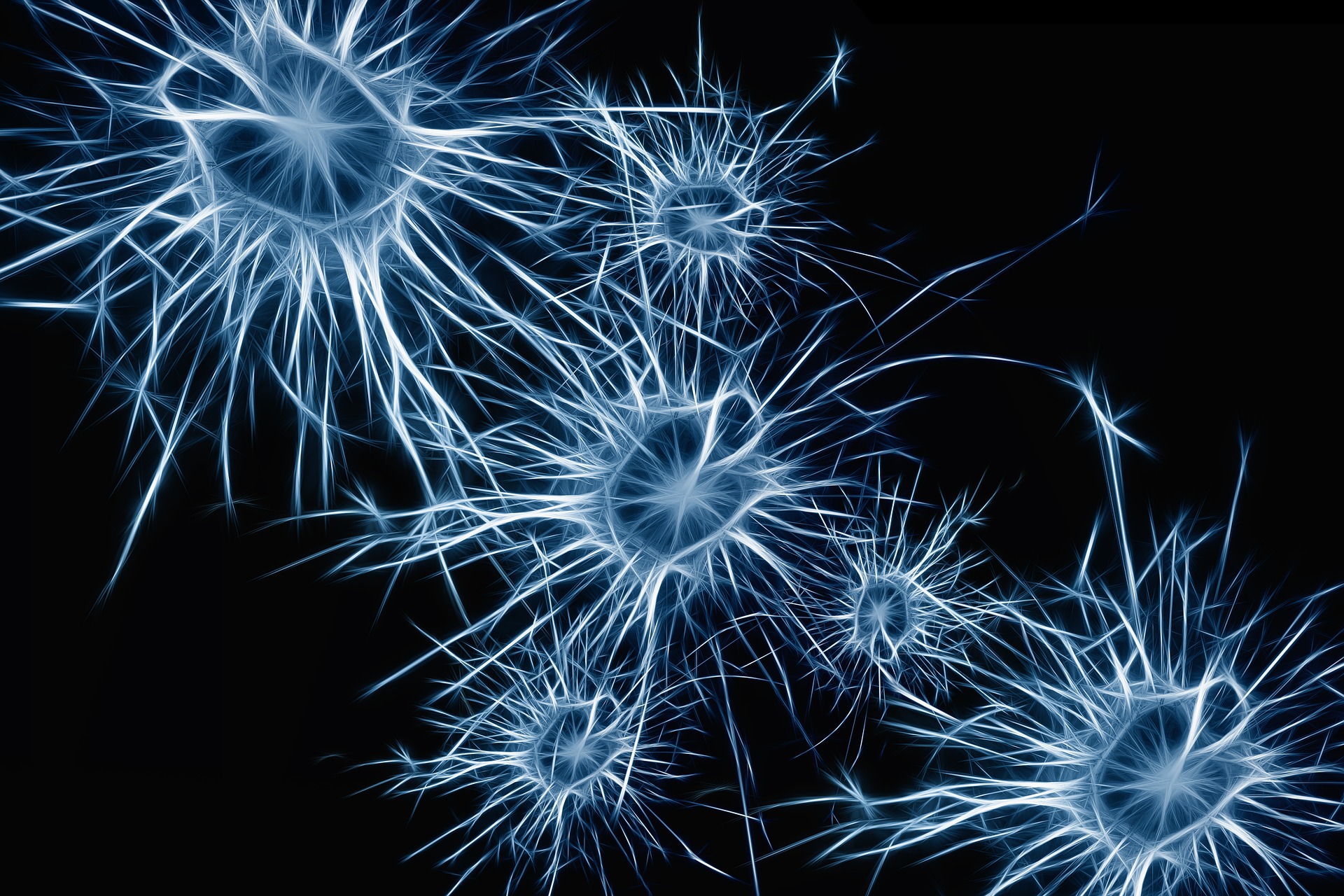

Collaborative Analytics

Predictive Analysis Without Integrating Data

CONNECT THE ANALYTICS

Extract global insights from distributed data

ELIMINATE THE COSTS OF DATA INTEGRATION

Analyze across silos while processing data in place, where it resides

QUANTIFY THE VALUE OF INFORMATION

Prioritize networked sources to answer specific questions

MITIGATE PRIVACY RISKS

Never transmit, pool, or have to govern sensitive data

EXTEND YOUR INFORMATION HORIZON

Leverage external sources of data you could never integrate

FUTURE-SCALE

Abstract away system details with a scalable analytics fabric

KEEP PACE WITH CHANGING DATA

Adapt with agile model updates and real-time predictions

INCREASE COMPETITIVENESS

Accelerate workflows to optimize data, algorithms, and parameters

BREAK FREE FROM INFRASTRUCTURE CONSTRAINTS

Continuously analyze over dynamic wide area networks

MARKET YOUR INFORMATION

Monetize your data without ever compromising it

INVEST IN DATA ANALYTICS WITH CONFIDENCE

Realize the predictive value of distributed data at scale